Summary / TL;DR

Google crawls websites at varying frequencies, often ranging from 4 days to a month, depending on factors like content freshness, site authority, and crawl budget. Crawling is the process where Googlebot visits websites to collect information for indexing. Pages with updated content, strong internal linking, quality backlinks, and well-structured data are prioritised. Google does not automatically crawl all pages and may skip those blocked by robots.txt, protected by passwords, or identified as duplicate URLs. Site owners can request recrawls via Google Search Console and monitor crawl activity using its tools to optimise indexing performance and visibility in search results.

Quick Answer:

Google’s automated tools, often referred to as ‘crawlers’ or ‘spiders’, continuously scan the internet to index websites. The frequency of this crawling can differ due to factors like content quality, relevance, backlinks, and overall site performance. Generally, many websites are crawled several times over a few weeks.

Google is the world’s biggest search engine and crawls sites continually to update its index. This helps Google find your new content and index your website, but it can also cause duplicate content issues if you don’t take precautions. Read this post to learn how often Google crawls a site and the risks of having too many pages indexed.

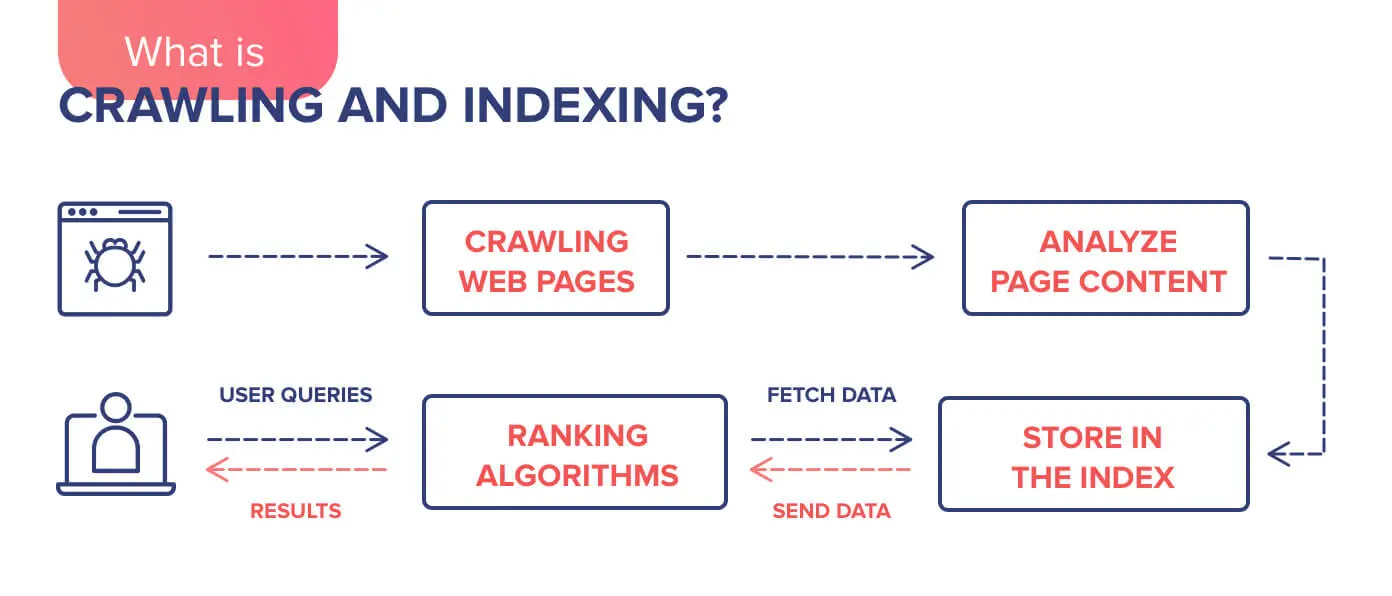

Anyone who has dabbled in SEO has undoubtedly heard of “crawl” and “index.”

Crawling and indexing are two indispensable components of technical SEO: together they allow a website to rank and make it accessible to users. While you might know the basics of the two terms, many factors encourage Google to crawl your website more often or index more of its pages.

Website owners often ask common questions about Google crawl frequency, such as how often Google crawls after making changes, how long the process takes, and how they can speed it up. Before we answer these questions, it makes sense to introduce you to the concept of crawling.

So, let’s get started!


What Is Google Crawling?

The Basics Explained: Crawling and Indexing

Crawling is a vital part of SEO. Google sends bots to explore your website and read its content; only content that Google’s bots have visited can go on to rank in search results.

Google and other search engines run large fleets of these bots, also called crawlers or spiders, which work their way across the publicly accessible web.

However, search engines don’t know about a new page until something points them to it. They find pages by following links from pages they already know and by reading sitemaps, so submitting a sitemap that lists your pages is a direct way to get Google to crawl your site.

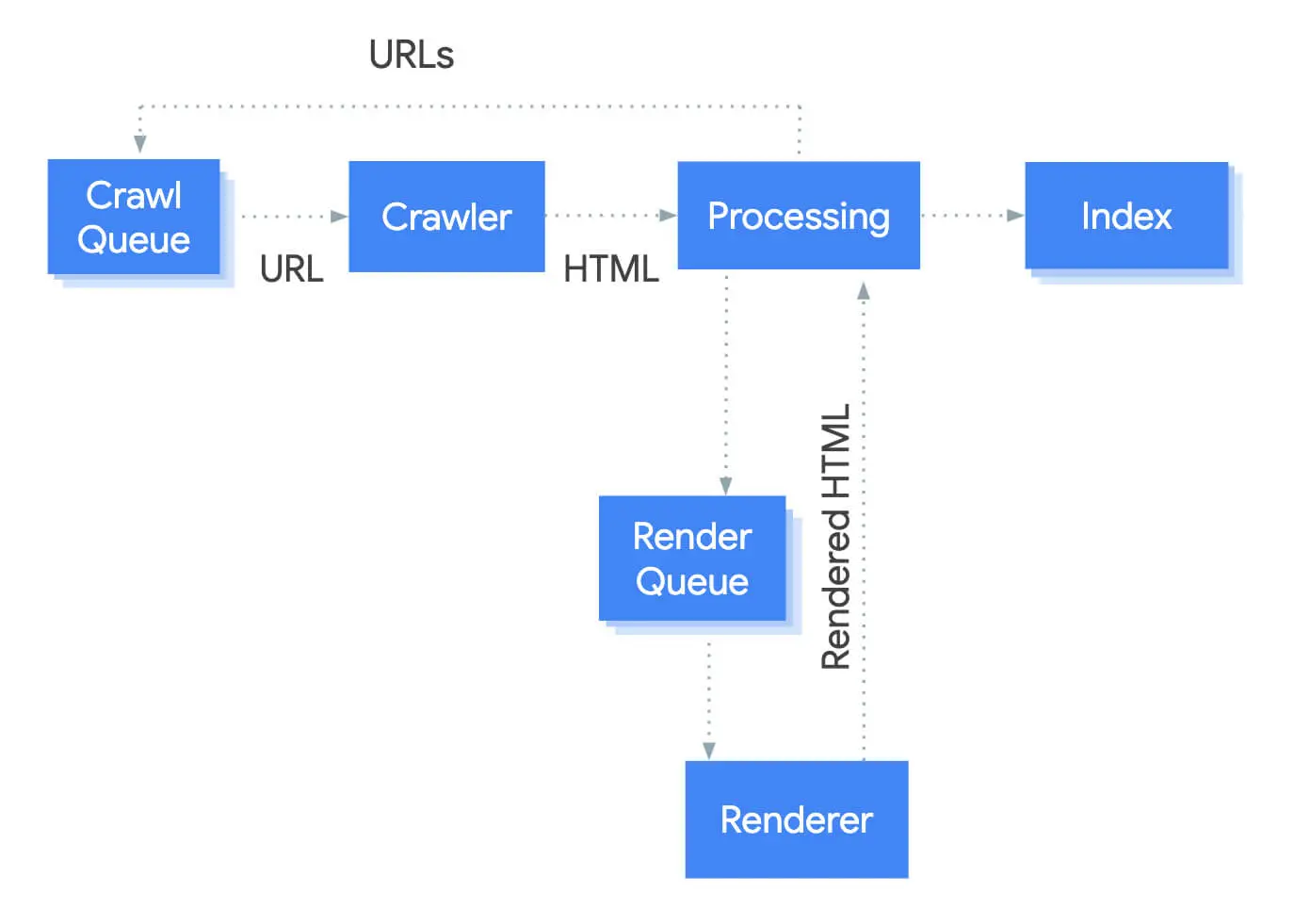

After discovering a URL, Google sends its crawlers to the page to assess new text and multimedia content. This helps decide if the page merits indexing.

After a crawler visits your website and analyses the content, the page is stored in Google’s index so it can appear in search results. This process is called indexing. Billions of pages are stored in this comprehensive database, and without access to your URLs, Google’s algorithm cannot discover or rank your content.

If there are new or updated pages you want in the Google index, you can request indexing through Google Search Console.

Does Google Crawl All Websites?

Google has no way of knowing your site exists until it finds a link to it or receives a sitemap. Additionally, it does not crawl all pages. It starts from pages it has already crawled and follows the URLs on them to discover new sites across the web.

Google’s bots crawl billions of web pages and add the links found on these pages to Google’s database. As a result, search engines discover newly posted sites and links and use them to update their indexes.

Once Google discovers a new site, it sends a crawler to analyse the pages and see whether they have relevant and fresh content. It also examines the images and videos to assess what each page is about.

A blog post or article that other already-indexed pages link to will likely be treated as an authority on its topic and indexed faster.

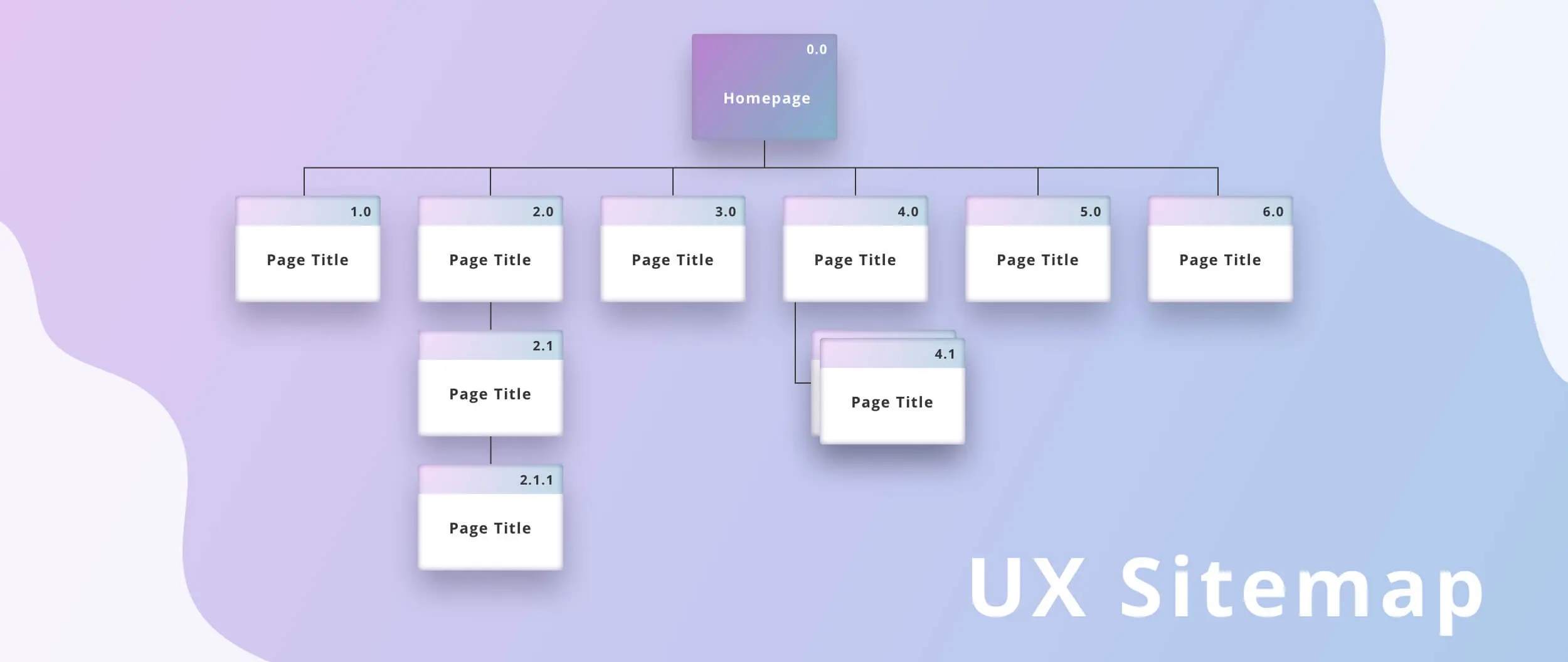

Thus, to ensure your site gets indexed and ranks well on Google’s SERPs, build a clear, relevant site structure that matches user intent.

If you own a site, you can ask Google to crawl your updated pages via Google Search Console. Submit an XML sitemap listing your desired URLs in the Sitemaps report to get them considered for indexing. It’s also a way to notify Google about any changes you’ve made and request a re-crawl.

Which Web Pages Are Not Crawled?

As stated earlier, Google uses links and sitemaps to find and index updated content. It crawls through billions of website pages daily, and if your site has obstacles that prevent crawling, Google will stop sending bots to the affected pages.

This will affect indexing and your overall ranking. When bots cannot access your updated blog or article, your position in Google’s rankings suffers. Although the bots work efficiently, there are certain situations in which a web page will not be crawled and you cannot get Google to index it.

These include pages that are not accessible to anonymous users: if your page is password-protected and not open to the general public, Google cannot crawl it. Likewise, if your page has already been crawled or is a duplicate of another URL, bots will crawl it less frequently.

Sometimes, robots.txt blocks certain pages, and Google cannot crawl them. So, check whether your URL has been blocked and make the necessary changes within the recommended 90 days.
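To see how a robots.txt rule can block a page, here is a minimal sketch using Python’s standard `urllib.robotparser` module. The robots.txt content and the `example.com` URLs are hypothetical, and the rules are parsed from a string so the example runs without network access. Python’s parser follows the basic robots.txt conventions and may differ from Googlebot’s own matching in edge cases, so treat it as a rough check rather than a definitive answer.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /drafts/
"""


def is_crawlable(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for page in ("https://example.com/blog/", "https://example.com/drafts/post"):
        status = "allowed" if is_crawlable(ROBOTS_TXT, "Googlebot", page) else "blocked"
        print(page, status)
```

If a page you want indexed shows up as blocked, the matching `Disallow` line in your robots.txt is the place to look.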

How Can I Tell If Google Has Crawled My Site?

Google will check your site when it has the link and access. But how do you know if Google’s been there? The answer’s in your Google Search Console account.

This tool helps site owners check whether Google’s bots have visited their pages and lets them manually request indexing for a site or page on demand.

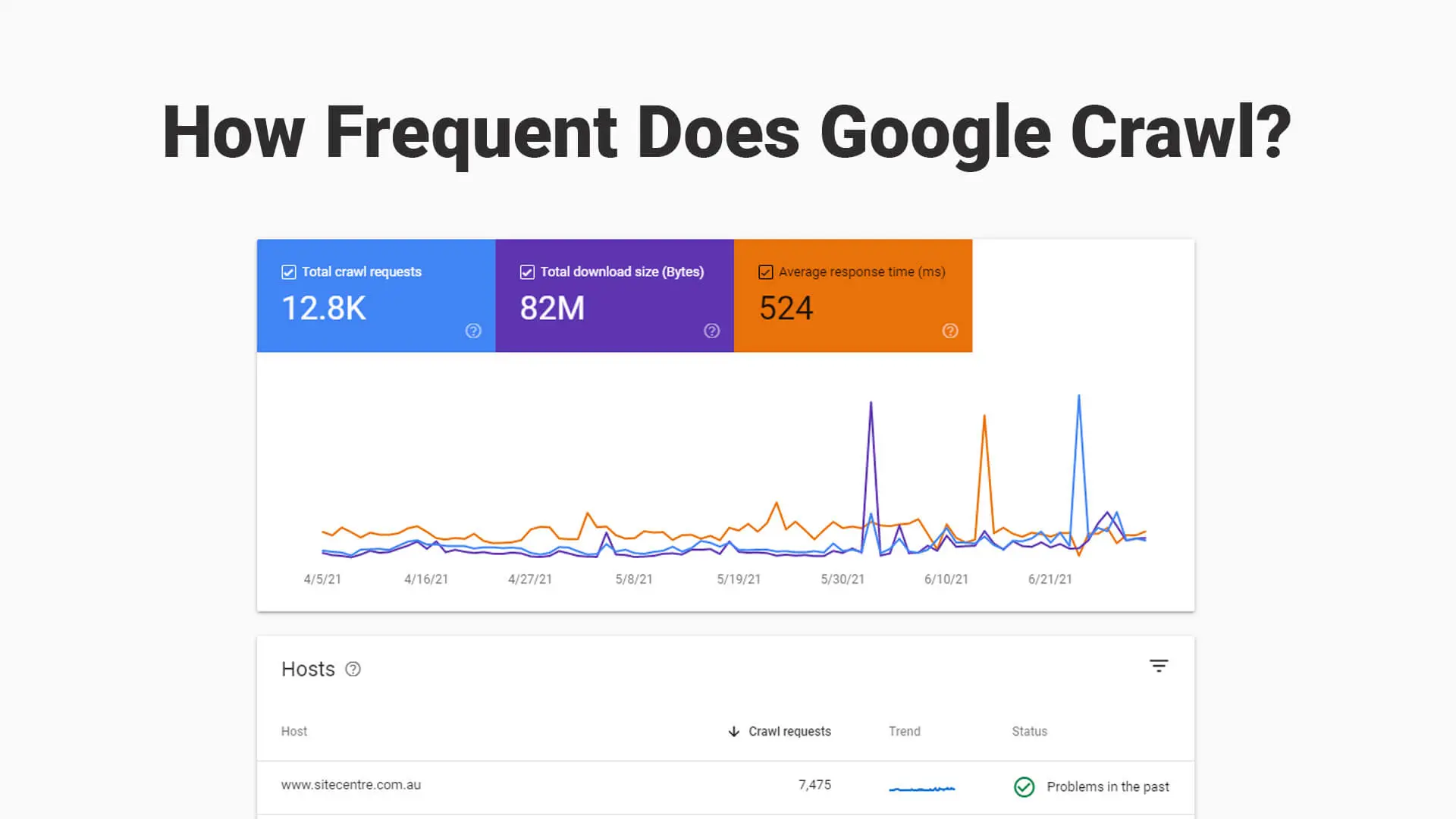

Through your Google Search Console account, you can check when Google’s bots last crawled your site and how often they crawl your pages. The detailed crawl stats report answers the question, “When was the last time Google crawled my website?”

These crawl stats help you understand your site’s index potential and what updates and changes you should make to improve SEO and get your content to rank higher.

In addition, Search Console answers the question, “How do I get Google to crawl my website again?”: you can ask Googlebot to re-crawl your pages and limit your crawl rate. The URL inspection tool, one of Google’s more recent additions, gives more transparent information and helps site owners understand how Google views a particular page.

Upon entering a URL in Google Search Console, the tool reports when Google last crawled it, any crawl errors encountered during crawling or indexing, and other pertinent information.
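Search Console is the tool this article relies on. As a complementary check, if you have access to your server’s access logs, you can count requests whose user agent mentions Googlebot. The sketch below is an illustration only: it assumes logs in the common “combined” format, the sample lines are made up, and the function name is hypothetical. A user-agent string can also be spoofed, so these counts are an approximation.

```python
import re
from collections import Counter

# Matches the date, request, status, size, referrer and user agent of a
# "combined" format access log line (an assumed format).
LOG_LINE = re.compile(
    r'\[(?P<day>[^:\]]+):[^\]]*\] '
    r'"(?:GET|HEAD|POST) \S+[^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Made-up sample log lines, used only for illustration.
SAMPLE_LOG = [
    '66.249.66.1 - - [10/Mar/2024:06:25:14 +0000] "GET /blog/ HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.9 - - [10/Mar/2024:07:01:02 +0000] "GET /blog/ HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '66.249.66.1 - - [10/Mar/2024:09:12:40 +0000] "GET /about/ HTTP/1.1" 200 2210 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [11/Mar/2024:03:44:10 +0000] "GET /blog/ HTTP/1.1" 304 0 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]


def googlebot_hits_per_day(lines):
    """Count log lines per day whose user agent contains 'Googlebot'."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("day")] += 1
    return hits


if __name__ == "__main__":
    for day, count in googlebot_hits_per_day(SAMPLE_LOG).items():
        print(day, count)
```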

Google Analytics can help you segment your traffic and determine what appeals to the people who visit your site, but you also need to know how to manage your website content so that Google crawls it. We’ll talk more about this in the next section.

How Can I Make Google Crawl My Site Faster?

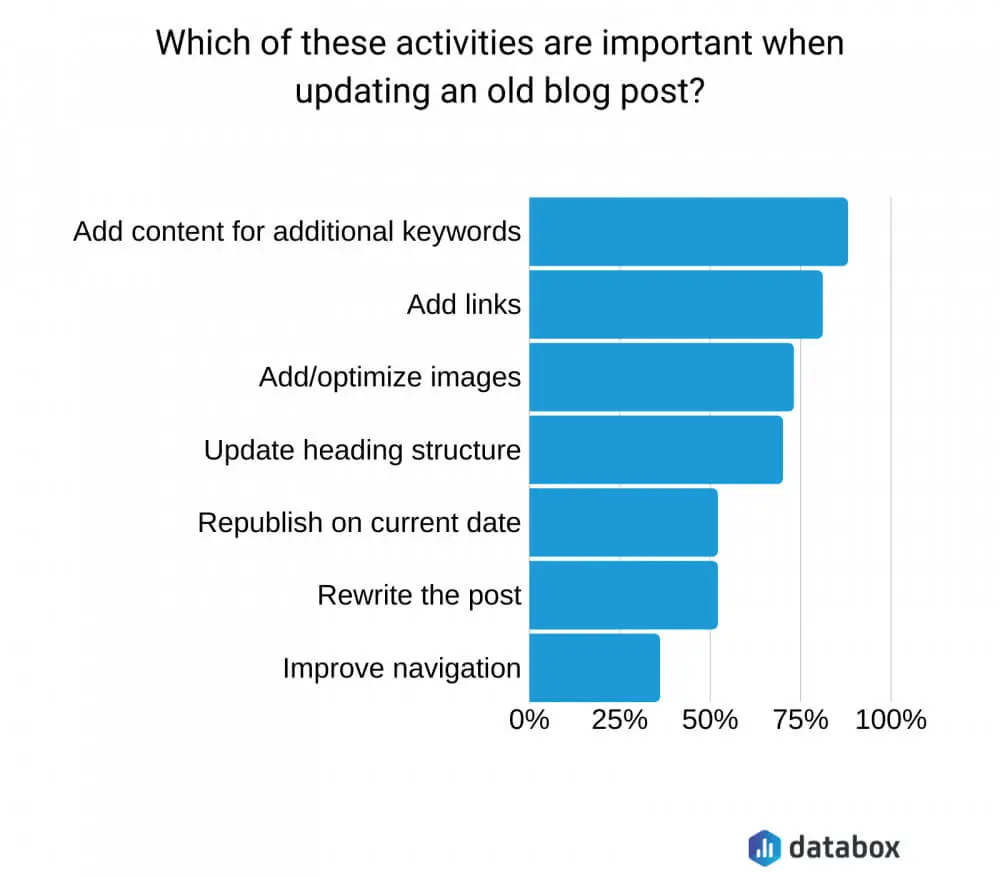

There are specific ways to improve your web content’s crawling frequency. These have been listed below:

1. Update Your Site

Add fresh content and new material to your site to boost the crawl rate. You can add relevant content to the blog section of your page and update it frequently with information pertaining to your industry.

Remember to include videos, pictures, and graphs to improve readability, and make sure each post shows its latest publish date. For instance, if you are a digital agency, you can write about SEO, Google Ads, etc.

2. Use Sitemaps

Merely updating content isn’t enough; you must also tell the search engine about these pages.

Inform Google Search Console about your updates using an XML sitemap. You can also add internal links to a new page from existing pages that already rank well. This helps bots discover and crawl your site faster. Besides, ensure your site is mobile-friendly.
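As a rough illustration of what an XML sitemap contains, the sketch below builds one with Python’s standard library, following the sitemaps.org `urlset` / `url` / `loc` / `lastmod` layout. The page URLs, dates and the `build_sitemap` helper are hypothetical; many CMSs and SEO plugins generate this file for you.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # date in YYYY-MM-DD form
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages, used only for illustration.
    example_pages = [
        ("https://example.com/", "2024-03-10"),
        ("https://example.com/blog/new-post", "2024-03-11"),
    ]
    print(build_sitemap(example_pages))
```

Save the output as `sitemap.xml` on your site and submit its URL in Search Console.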

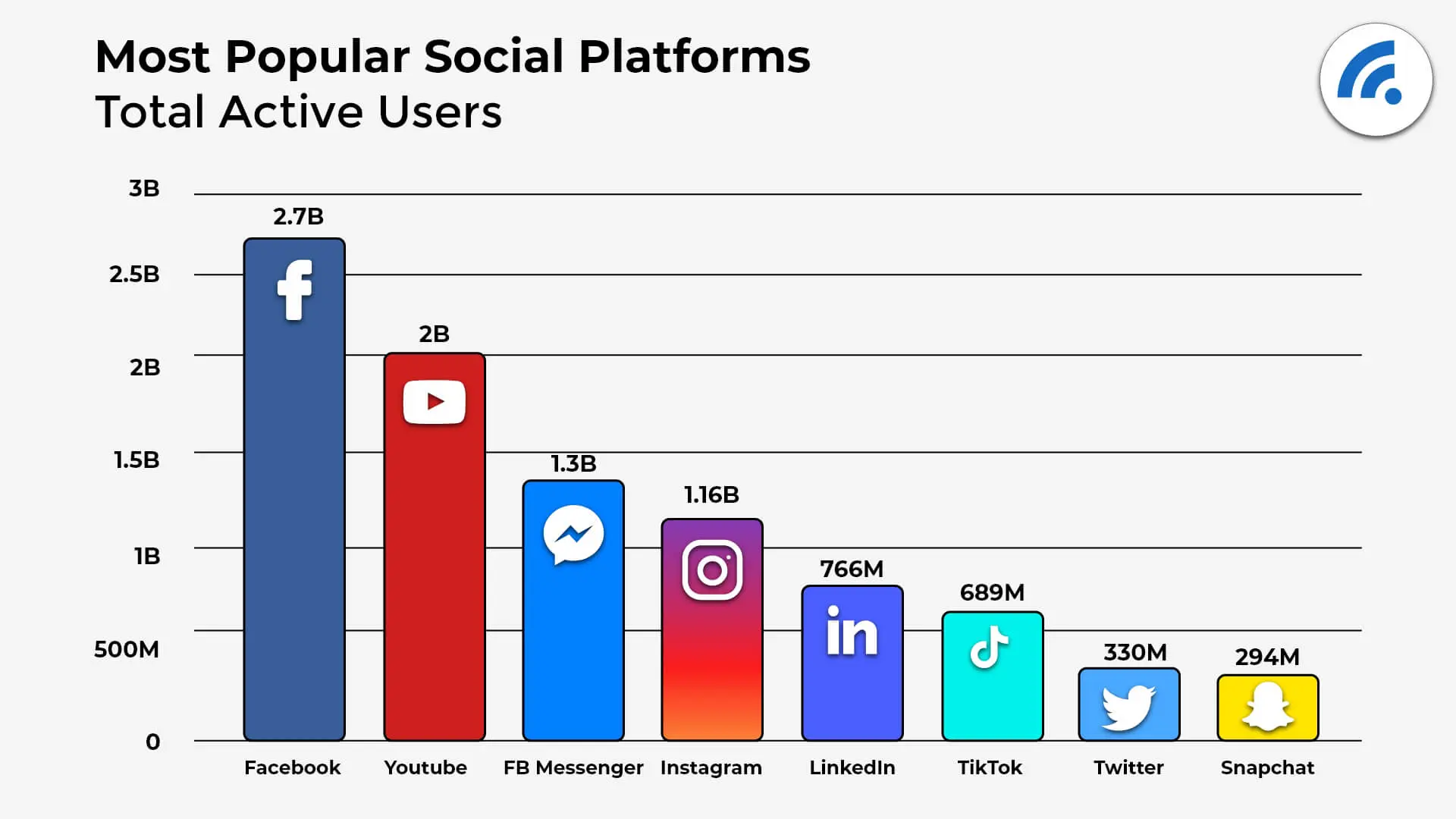

3. Share Content

Sharing is one of the surest ways to draw attention to your site. Share your content on social media platforms and within industry communities. You can also piggyback on the popularity of other sites by offering guest posts.

4. Use Internal Linking

Links are another way to get search engines to notice your page. Internal links help Google move from your established pages to new ones, and when other websites share or publish links to your content, it alerts Google, which responds by sending bots to your website to aid the indexing process.

The higher the number of backlinks your page has, the higher the chance of attracting organic traffic to your website. Of course, the condition here is that your content needs to be reliable and credible.

5. Keep Your Data Structured

Ensure your data is SEO-compliant, incorporating proper meta tags, engaging titles, and regular page updates. Google uses its resources intelligently, so if your page is not active or accessible or your data isn’t well-structured, the search engine stops sending bots to it.

As a result, the chances of a user finding your site are minimised, and your index rank is reduced.

How Long For Google To Crawl Site?

You may be wondering, “How often does Google crawl my website?” The short answer is that it is nearly impossible to know the exact rate, even if you are an expert in digital marketing.

However, certain factors play a crucial role: frequent website updates, domain authority, backlinks, and so on all help increase the crawling frequency.

There is also something called a crawl budget, which determines the number of pages Google’s bots crawl on your site during a particular timeframe. Optimising your crawl budget by adding new pages and eliminating dead links and redirects is important, since dead or redirecting URLs in your sitemap can hurt your site’s indexing.
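One simple way to act on this is to sort your sitemap URLs by the HTTP status they return and keep only the ones that resolve directly. The sketch below assumes you have already collected a status code for each URL (for example, from a site crawl); the URLs, the codes and the `audit_sitemap_urls` helper are all hypothetical.

```python
def audit_sitemap_urls(statuses):
    """Group sitemap URLs by HTTP status: keep (2xx), redirect (3xx), dead (anything else)."""
    report = {"keep": [], "redirect": [], "dead": []}
    for url, code in statuses.items():
        if 200 <= code < 300:
            report["keep"].append(url)
        elif 300 <= code < 400:
            report["redirect"].append(url)
        else:
            report["dead"].append(url)
    return report


if __name__ == "__main__":
    # Hypothetical status codes, used only for illustration.
    collected = {
        "https://example.com/": 200,
        "https://example.com/old-page": 301,
        "https://example.com/missing": 404,
    }
    for group, urls in audit_sitemap_urls(collected).items():
        print(group, urls)
```

URLs in the `redirect` group are usually best replaced in the sitemap by their final destination, and those in the `dead` group removed or fixed.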

Summing Up: How Long Before Google Crawls My Site?

Google’s bots revisit pages across the internet on a regular basis. After crawling your site, Google adds its pages to its indexed database and updates the SERPs.

The exact algorithm is unknown, but there are ways you can ensure your site gets crawled more frequently and regularly. Naturally, the more visitors you get and the more authoritative backlinks Google’s crawler finds pointing to your page, the higher the crawling rate. This can result in a higher ranking on SERPs.

Besides, ensure your page is fast and enhances user experience while avoiding server connectivity errors. What matters most is the quality of your content, landing pages, and the visual layout of your webpage. It can take Google anywhere between 4 days and a month to crawl and index your site.

So, be patient and adopt suitable strategies that improve the crawl rate!

It’s important to know SEO basics, but it’s also important to understand what is happening behind the scenes.

Crawling and indexing are two indispensable components that help your content rank and make it accessible for a user.

Many factors influence how often a website is crawled and how quickly it is indexed, including link popularity, site speed, bandwidth availability, page load time, etc.

Google updates its search results frequently; there’s a good chance this happens at least once daily. If you want more information about crawling, indexing, ranking factors, and other aspects of digital marketing, we offer services such as search engine optimisation (SEO) consulting and web design in Australia; call us at 1300 755 306.